How Can You Easily Build an Efficient Log Collection System in Go?

- Industry News

- 2025-04-18


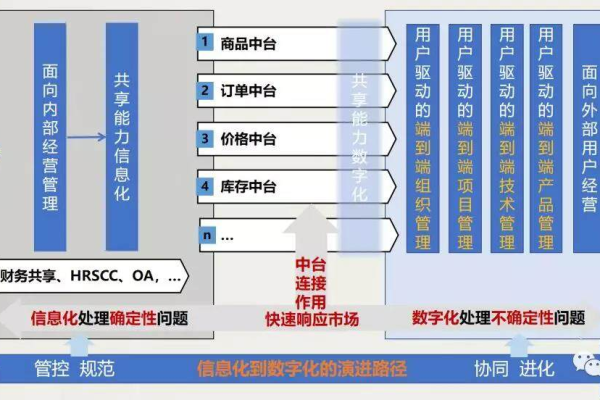

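This article builds an efficient log collection system in Go. It covers the architecture and the collection and transport pipeline, and combines Kafka and Elasticsearch for real-time storage and analysis, giving a scalable solution for operating distributed systems.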

(Diagram: the typical components of a log collection system)

Core System Components

1. **Log Collection Agent**

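```go
// Monitor the log file with the tail library (github.com/hpcloud/tail)
t, err := tail.TailFile("/var/log/app.log", tail.Config{
	Follow: true, // keep reading new lines as they are appended (like `tail -f`)
	ReOpen: true, // reopen the file when it is recreated by rotation (like `tail -F`)
})
if err != nil {
	log.Fatalf("tail /var/log/app.log: %v", err)
}
for line := range t.Lines {
	sendToKafka(line.Text)
}
```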

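- Watches the log file for new lines in real time

- Detects log rotation

- Reopens the file automatically after errors, keeping collection stable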

2. **Message Queue Layer**

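```go
// Configure the Kafka producer (github.com/IBM/sarama)
config := sarama.NewConfig()
config.Producer.Return.Successes = true // required by SyncProducer
producer, err := sarama.NewSyncProducer([]string{"kafka:9092"}, config)
if err != nil {
	log.Fatalf("create producer: %v", err)
}
defer producer.Close()

// Send a log message
msg := &sarama.ProducerMessage{
	Topic: "app_logs",
	Value: sarama.StringEncoder(logData),
}
partition, offset, err := producer.SendMessage(msg)
if err != nil {
	log.Printf("send log: %v", err)
} else {
	log.Printf("delivered to partition %d at offset %d", partition, offset)
}
```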

- The Kafka cluster acts as a high-throughput buffer

- Partitioning enables horizontal scaling

- Message persistence ensures reliability
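Partitioning scales well when related messages are spread predictably. With Sarama's default hash partitioner, messages that carry the same `Key` (for example `sarama.StringEncoder(hostname)` set on the `ProducerMessage`) land in the same partition, so each host's lines stay in order while different hosts spread across partitions. The sketch below illustrates the idea with FNV-1a; `partitionFor` is a hypothetical helper and is not claimed to reproduce Sarama's exact partition choice.

```go
package main

import "hash/fnv"

// partitionFor maps a message key (for example the hostname that produced
// the log line) to one of numPartitions partitions. The same key always
// maps to the same partition; distinct keys spread across all of them.
func partitionFor(key string, numPartitions int32) int32 {
	h := fnv.New32a()
	h.Write([]byte(key)) // hash.Hash.Write never returns an error
	// Take the modulo on the unsigned hash so the result is never negative.
	return int32(h.Sum32() % uint32(numPartitions))
}
```

Adding partitions later changes the modulo and therefore the mapping, which is one reason partition counts are usually chosen with headroom up front.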

3. **Log Processing Engine**

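```go
// Consume Kafka messages (a nil config means sarama's defaults)
consumer, err := sarama.NewConsumer([]string{"kafka:9092"}, nil)
if err != nil {
	log.Fatalf("create consumer: %v", err)
}
defer consumer.Close()

// Read partition 0 of the app_logs topic, starting with new messages only
partitionConsumer, err := consumer.ConsumePartition("app_logs", 0, sarama.OffsetNewest)
if err != nil {
	log.Fatalf("consume partition: %v", err)
}
defer partitionConsumer.Close()

for msg := range partitionConsumer.Messages() {
	parsedLog := parseLog(msg.Value)
	storeToES(parsedLog)
}
```

- Parses log formats in real time

- Filters out malformed data

- Normalizes fields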
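The `parseLog` step is where filtering and normalization happen. A minimal sketch, assuming a hypothetical plain-text format `<RFC 3339 timestamp> <level> <message>`; `parseLine`, the `LogDocument` fields and the level alias table are illustrative assumptions, not the author's schema. Lines that fail to parse come back as errors so the caller can drop or count them.

```go
package main

import (
	"fmt"
	"strings"
	"time"
)

// LogDocument is a hypothetical normalized record ready for indexing.
type LogDocument struct {
	Timestamp time.Time `json:"@timestamp"`
	Level     string    `json:"level"`
	Message   string    `json:"message"`
}

// levelAliases maps the different spellings applications use to one
// canonical level name.
var levelAliases = map[string]string{
	"dbg": "debug", "debug": "debug",
	"inf": "info", "info": "info",
	"warn": "warn", "warning": "warn",
	"err": "error", "error": "error",
	"fatal": "fatal",
}

// parseLine parses a line assumed to look like
//
//	2025-04-18T10:15:00+08:00 WARNING disk usage at 91%
//
// normalizing the timestamp to UTC and the level to its canonical name.
func parseLine(line string) (LogDocument, error) {
	parts := strings.SplitN(strings.TrimSpace(line), " ", 3)
	if len(parts) < 3 {
		return LogDocument{}, fmt.Errorf("malformed line: %q", line)
	}
	ts, err := time.Parse(time.RFC3339, parts[0])
	if err != nil {
		return LogDocument{}, fmt.Errorf("bad timestamp: %w", err)
	}
	level, ok := levelAliases[strings.ToLower(parts[1])]
	if !ok {
		return LogDocument{}, fmt.Errorf("unknown level %q", parts[1])
	}
	return LogDocument{Timestamp: ts.UTC(), Level: level, Message: parts[2]}, nil
}
```

In the consumer loop, `parseLog(msg.Value)` could wrap this as `parseLine(string(msg.Value))` and skip the document when an error comes back.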

4. **Storage and Visualization Layer**

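```go
// Store documents in Elasticsearch. These calls follow the olivere/elastic
// client API; esClient is created once at startup with elastic.NewClient()
// and reused, rather than creating a new client for every document.
var esClient *elastic.Client

func storeToES(doc LogDocument) {
	_, err := esClient.Index().
		Index("applogs-" + time.Now().Format("2006.01.02")). // one index per day
		BodyJson(doc).
		Do(context.Background())
	if err != nil {
		log.Printf("index log document: %v", err)
	}
}
```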

- Elasticsearch's inverted index speeds up queries

- Kibana provides visual dashboards

- Data is split into one index per day

Key Implementation Techniques

Concurrent Processing Model

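```go
// Process logs with a worker pool: 5 goroutines drain one buffered channel
jobs := make(chan LogEntry, 100)
for w := 1; w <= 5; w++ {
	go func(id int) {
		for entry := range jobs { // exits once jobs is closed and drained
			processLog(entry)
		}
	}(w)
}
```

Compressed Log Transport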

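```go
// Compress with S2 (github.com/klauspost/compress/s2); the WriterSnappyCompat
// option makes the output a standard Snappy stream any Snappy reader can decode
var buf bytes.Buffer
writer := s2.NewWriter(&buf, s2.WriterSnappyCompat())
if _, err := writer.Write([]byte(rawLog)); err != nil {
	return err
}
if err := writer.Close(); err != nil { // Close flushes the buffered data
	return err
}
send(buf.Bytes())
```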
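The Go standard library has no Snappy codec, so this self-contained sketch swaps in `compress/gzip` to show the same pattern: compress a batch of lines before sending and restore it on the receiving side. Batching matters because a single short line gives any codec little repetition to exploit. `compressBatch` and `decompressBatch` are hypothetical names. Sarama can also compress at the producer level instead (`config.Producer.Compression = sarama.CompressionSnappy`), which avoids hand-rolled framing entirely.

```go
package main

import (
	"bytes"
	"compress/gzip"
	"io"
	"strings"
)

// compressBatch joins a batch of log lines with newlines and gzip-compresses
// the result. Lines are assumed not to contain newlines themselves.
func compressBatch(lines []string) ([]byte, error) {
	var buf bytes.Buffer
	zw := gzip.NewWriter(&buf)
	if _, err := io.WriteString(zw, strings.Join(lines, "\n")); err != nil {
		return nil, err
	}
	if err := zw.Close(); err != nil { // Close flushes data and writes the gzip footer
		return nil, err
	}
	return buf.Bytes(), nil
}

// decompressBatch reverses compressBatch on the receiving side.
func decompressBatch(data []byte) ([]string, error) {
	zr, err := gzip.NewReader(bytes.NewReader(data))
	if err != nil {
		return nil, err
	}
	defer zr.Close()
	raw, err := io.ReadAll(zr)
	if err != nil {
		return nil, err
	}
	return strings.Split(string(raw), "\n"), nil
}
```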

Resuming from Checkpoints

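```go
// Persist the consumed offset so consumption can resume after a restart.
// Writing a temp file and renaming it over the old one means a crash
// never leaves a half-written offset.dat.
func saveOffset(offset int64) error {
	const path = "offset.dat"
	tmp := path + ".tmp"
	if err := os.WriteFile(tmp, []byte(strconv.FormatInt(offset, 10)), 0644); err != nil {
		return err
	}
	return os.Rename(tmp, path)
}
```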

System Optimization

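- Resource control

- Memory limit:

```go
// Soft memory limit of 2 GiB for the Go runtime (package runtime/debug, Go 1.19+)
debug.SetMemoryLimit(2 * 1024 * 1024 * 1024)
```

- Goroutine leak detection:

```go
// Every 30 seconds, log the goroutine count (a number that keeps rising
// suggests a leak) and force a GC that returns freed memory to the OS
go func() {
	for range time.Tick(30 * time.Second) {
		log.Printf("goroutines: %d", runtime.NumGoroutine())
		debug.FreeOSMemory()
	}
}()
```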

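- Metrics collection

```go
// Expose Prometheus metrics (github.com/prometheus/client_golang/prometheus)
logCount := prometheus.NewCounterVec(
	prometheus.CounterOpts{
		Name: "log_processed_total",
		Help: "Total processed logs",
	},
	[]string{"level"}, // one time series per log level
)
prometheus.MustRegister(logCount)
// Count each processed line, e.g. logCount.WithLabelValues("error").Inc()
```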

Production Considerations

- Security hardening

- TLS-encrypted transport

- Kafka SASL authentication

- Elasticsearch RBAC (role-based access control)

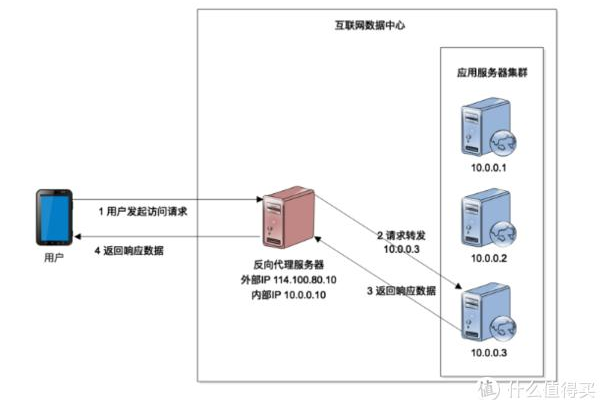

- Reliability

- Local disk buffering as a backup

- Cluster health checks

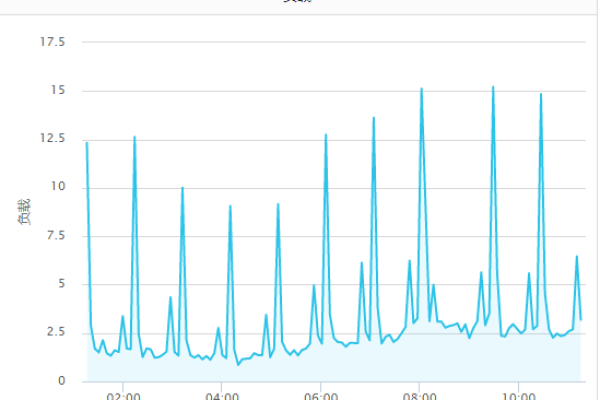

- Load-test figures:

- Single-node throughput: 10,000+ log lines per second

- End-to-end latency: < 500 ms

- Maintainability

- Hot reloading of configuration

- Log sampling for debugging

- Version compatibility test matrix

References:

- Go tail library: https://github.com/hpcloud/tail

- Sarama Kafka client: https://github.com/IBM/sarama

- Elasticsearch Go SDK: https://github.com/elastic/go-elasticsearch

- Prometheus Go client library guide: https://prometheus.io/docs/guides/go-application/

(Note: the code examples in this article are simplified; production code needs complete error handling.)