How do you correctly configure the MySQL database driver and upload the connection driver?

- 2024-09-06

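Databases are central to storing and processing data, and MySQL, a widely used open-source relational database management system, is a common choice for developers and companies. This article walks through how to configure the MySQL database driver and how to upload the MySQL connection driver to the environment where the application runs, so that the application can connect to the database reliably: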

1. Configuring the MySQL database driver

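Download and install MySQL Connector/J: First download MySQL Connector/J, the JDBC driver that Java applications use to connect to a MySQL database. The driver package (mysql-connector-java-x.x.xx.jar; releases from 8.0.31 onward are named mysql-connector-j-x.x.xx.jar) is available from the official MySQL website or from the Maven repository.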

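Add it to the classpath: Add the downloaded JAR file to the classpath of your Java project. If you use an IDE such as IntelliJ IDEA, you can place the JAR in the project's lib directory and add it to the project's dependencies.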

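Manage the dependency with a build tool: If your project uses a build tool such as Maven or Gradle, add the MySQL driver as a dependency in the project's pom.xml or build.gradle file. The build tool then downloads and manages the driver automatically.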

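Configure a database connection pool: Enterprise applications usually use a connection pool such as HikariCP or C3P0 to make better use of database connections. In the pool configuration, specify the MySQL driver class name (com.mysql.cj.jdbc.Driver for Connector/J 8.x; the older 5.x line uses com.mysql.jdbc.Driver) so that the correct driver is loaded.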
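
As a rough illustration of the pool configuration described above, the sketch below collects the settings in a plain java.util.Properties object, using the property names HikariCP documents (jdbcUrl, username, password, driverClassName, maximumPoolSize). The class name PoolSettings, the method forMySql, the URL, the credentials, and the pool size of 10 are all placeholders chosen for this example, not values from the article.

```java
import java.util.Properties;

// Hypothetical helper for this example; not part of HikariCP or Connector/J.
final class PoolSettings {

    /** Builds pool settings keyed by HikariCP's documented property names. */
    static Properties forMySql(String jdbcUrl, String username, String password) {
        Properties props = new Properties();
        props.setProperty("jdbcUrl", jdbcUrl);
        props.setProperty("username", username);
        props.setProperty("password", password);
        // Driver class for Connector/J 8.x, as named in the text above.
        props.setProperty("driverClassName", "com.mysql.cj.jdbc.Driver");
        // Assumed pool size for illustration; tune it for your workload.
        props.setProperty("maximumPoolSize", "10");
        return props;
    }

    public static void main(String[] args) {
        Properties props = forMySql("jdbc:mysql://localhost:3306/dbname", "username", "password");
        // With HikariCP on the classpath, these settings could be applied as:
        //   HikariDataSource ds = new HikariDataSource(new HikariConfig(props));
        props.forEach((key, value) -> {
            if (!"password".equals(key)) {
                System.out.println(key + "=" + value);
            }
        });
    }
}
```

Keeping the settings in a Properties object means the same values can also be loaded from a file instead of being hard-coded.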

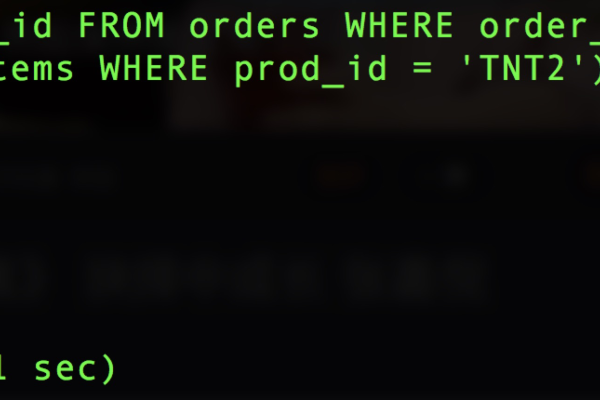

Test the database connection: Once configuration is complete, write a small Java program to verify the connection. For example:

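```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;

public class ConnectionTest {
    public static void main(String[] args) {
        // Replace dbname, username, and password with your own values.
        String url = "jdbc:mysql://localhost:3306/dbname";
        try {
            // Optional with JDBC 4.0+ drivers, which register themselves automatically.
            Class.forName("com.mysql.cj.jdbc.Driver");
            // try-with-resources closes the connection when the block exits.
            try (Connection conn = DriverManager.getConnection(url, "username", "password")) {
                System.out.println("Connected to MySQL database");
            }
        } catch (ClassNotFoundException | SQLException e) {
            e.printStackTrace();
        }
    }
}
```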

2. Uploading the MySQL connection driver

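Get the driver package: Make sure the driver package you download is compatible with your MySQL server version and your JDK version. On the MySQL Community Downloads page, the "Platform Independent" archive contains the JAR and can be used on any operating system.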

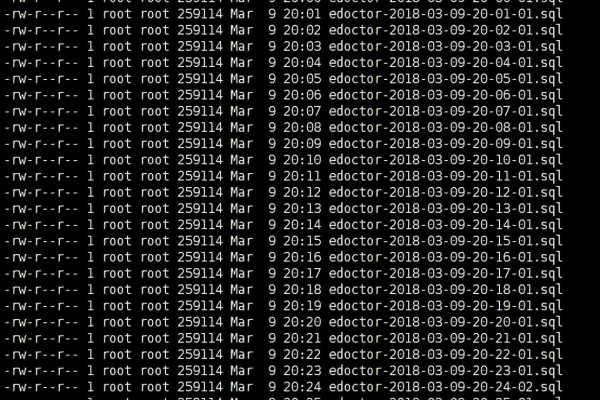

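Upload to the server: If the application is deployed on a remote server, the driver package has to be uploaded to that server. You can use an FTP tool such as FileZilla, or copy the file directly with the scp command on Linux.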
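
One way to confirm that the upload did not corrupt the file is to compare a checksum of the JAR before and after the transfer. The sketch below is an optional extra step, not something the procedure above requires; the class name JarChecksum and its command-line usage are made up for this example. It prints the SHA-256 digest of a file using only the JDK.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical helper for this example.
final class JarChecksum {

    /** Returns the SHA-256 digest of the given bytes as lowercase hex. */
    static String sha256Hex(byte[] data) {
        try {
            byte[] digest = MessageDigest.getInstance("SHA-256").digest(data);
            StringBuilder hex = new StringBuilder();
            for (byte b : digest) {
                hex.append(String.format("%02x", b));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            // SHA-256 is required on every Java platform, so this is unexpected.
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.err.println("usage: java JarChecksum.java <path-to-jar>");
            return;
        }
        // Reads the whole file into memory, which is fine for a driver JAR of a few MB.
        System.out.println(sha256Hex(Files.readAllBytes(Paths.get(args[0]))));
    }
}
```

Run it once on the local copy and once on the server; if the two digests match, the file arrived intact.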

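Install into a container environment: For containerized deployments such as Docker, use a COPY or ADD instruction in the Dockerfile to add the driver package to the image. In a Kubernetes deployment, the driver can instead be supplied through a mounted storage volume; a ConfigMap is limited to 1 MiB of data, which is generally too small for the driver JAR.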

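Import into the application server: If the application is deployed in a web application server such as Tomcat or JBoss, place the driver package in the directory the server designates for shared libraries, such as the lib directory of the Tomcat installation.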

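Update the application server configuration: Some application servers need a configuration change before they recognize and use a newly added driver. Tomcat loads the JARs in its lib directory at startup, so a restart is usually enough; if the driver is stored elsewhere, that location may need to be added to the common.loader setting in catalina.properties.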

3. Understanding how JDBC and the MySQL driver relate

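The role of the JDBC API: JDBC (Java Database Connectivity) gives Java programs a uniform way to access many kinds of databases. Its core interfaces live in the java.sql package, and it is the layer through which a Java application talks to a database.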

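Choosing a driver: Each database needs its own implementation of the JDBC driver interfaces. MySQL Connector/J is the JDBC driver implementation for MySQL, and it is what allows a Java program to connect to a MySQL database.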

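Compatibility: When choosing a MySQL driver, check that the driver version is compatible with the MySQL server version, to avoid connection problems caused by a version mismatch.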
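
To make the split between the JDBC API and its driver implementations more concrete, the sketch below asks java.sql.DriverManager which drivers are currently registered and prints each implementation class with its version. The class name RegisteredDrivers is invented for this example. With Connector/J 8.x on the classpath, the output would be expected to include com.mysql.cj.jdbc.Driver; without any driver JAR, the list may simply be empty.

```java
import java.sql.Driver;
import java.sql.DriverManager;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical helper for this example.
final class RegisteredDrivers {

    /** Returns "class-name major.minor" for every driver known to DriverManager. */
    static List<String> list() {
        List<String> result = new ArrayList<>();
        // getDrivers() returns an Enumeration; Collections.list turns it into a List.
        for (Driver driver : Collections.list(DriverManager.getDrivers())) {
            result.add(driver.getClass().getName() + " "
                    + driver.getMajorVersion() + "." + driver.getMinorVersion());
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> drivers = list();
        if (drivers.isEmpty()) {
            System.out.println("No JDBC drivers registered; is the driver JAR on the classpath?");
        }
        drivers.forEach(System.out::println);
    }
}
```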

4. Advantages of using an IDE

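Simpler configuration: IDEs such as IntelliJ IDEA provide a graphical interface that simplifies setting up database connections and drivers. A connection can be created in a few steps, and project dependencies can be managed visually.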

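Automatic dependency management: The build tools integrated into the IDE (such as the Maven plugin) download and manage dependency libraries automatically, which cuts down on tedious manual work.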

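Convenient testing and debugging: The IDE's built-in database tools can execute SQL statements directly, which makes it easy to test and debug database operations.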

5. Common errors and solutions

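Driver class not found (ClassNotFoundException): Make sure the driver JAR has been added to the classpath correctly and that the driver class name is spelled correctly.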
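
A quick way to tell a classpath problem apart from a misspelled class name is to probe for the class directly. The sketch below does that with Class.forName; the class name DriverCheck and the method isOnClasspath are invented for this example.

```java
// Hypothetical helper for this example.
final class DriverCheck {

    /** Returns true if the named class can be located by this class's class loader. */
    static boolean isOnClasspath(String className) {
        try {
            // initialize=false: only locate the class, do not run its static initializer.
            Class.forName(className, false, DriverCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Driver class names for Connector/J 8.x and for the older 5.x line.
        String[] candidates = {"com.mysql.cj.jdbc.Driver", "com.mysql.jdbc.Driver"};
        for (String name : candidates) {
            System.out.println(name + " -> " + (isOnClasspath(name) ? "found" : "missing"));
        }
    }
}
```

If both names are reported as missing, the JAR is not on the classpath; if only one is found, the configured class name probably targets a different driver version.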

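Database connection failure: Check that the database URL, user name, and password are correct, that the database service is running, and that the network path to it is open.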
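
The SQLState code carried by a java.sql.SQLException often narrows down which of these checks to start with. The sketch below maps the standard SQLState classes 08 (connection exception), 28 (invalid authorization), and 42 (syntax error or access rule violation) to a hint. The class name ConnectionDiagnosis and the hint wording are made up for this example, and the mapping is a rough guide rather than a complete diagnosis.

```java
import java.sql.SQLException;

// Hypothetical helper for this example.
final class ConnectionDiagnosis {

    /** Maps the SQLState class (its first two characters) to a troubleshooting hint. */
    static String hint(SQLException e) {
        String state = e.getSQLState();
        if (state == null) {
            return "Unknown: no SQLState available, inspect the exception message.";
        }
        if (state.startsWith("08")) {
            return "Connection: check the URL, host, port, network, and that the server is running.";
        }
        if (state.startsWith("28")) {
            return "Authentication: check the user name and password.";
        }
        if (state.startsWith("42")) {
            return "Access or syntax: check the database name and the user's privileges.";
        }
        return "Other: SQLState " + state + ", inspect the exception message.";
    }

    public static void main(String[] args) {
        // 08S01 is the SQLState MySQL typically reports for a communications failure.
        System.out.println(hint(new SQLException("Communications link failure", "08S01")));
        // 28000 is the SQLState MySQL typically reports when access is denied.
        System.out.println(hint(new SQLException("Access denied for user", "28000")));
    }
}
```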

Version incompatibility: If you run into a version incompatibility, try a different driver version or upgrade the database system.

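Knowing how to configure and upload the MySQL connection driver is essential for Java developers. Following the steps and precautions above helps ensure that a Java application can connect to its MySQL database without problems. The FAQs below reinforce these points.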

Related FAQs:

1. Why choose MySQL Connector/J as the driver?

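MySQL Connector/J is the official JDBC driver for MySQL, maintained by Oracle. Each release line supports a defined range of MySQL server versions rather than every version, and the driver is updated regularly to keep up with newer Java releases. As the vendor-supported driver, it is the usual first choice for connecting Java applications to MySQL.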

2. How do you ensure the driver is compatible with your MySQL server version?

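When downloading the driver, choose a version that is compatible with your MySQL server version. The driver's documentation or the official website lists the supported combinations, and running a connection test early on is another good way to confirm compatibility.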
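
For the early connection test, it can help to print the driver and server versions actually in use so they can be checked against the compatibility list. The sketch below reads them from the standard java.sql.DatabaseMetaData interface; the class name VersionReport, the URL, and the credentials are placeholders for this example.

```java
import java.sql.Connection;
import java.sql.DatabaseMetaData;
import java.sql.DriverManager;
import java.sql.SQLException;

// Hypothetical helper for this example.
final class VersionReport {

    /** Formats the driver and server versions reported by the JDBC metadata. */
    static String describe(DatabaseMetaData meta) throws SQLException {
        return "driver=" + meta.getDriverName() + " " + meta.getDriverVersion()
                + ", server=" + meta.getDatabaseProductName() + " "
                + meta.getDatabaseProductVersion();
    }

    public static void main(String[] args) {
        // Placeholder connection details; replace them with your own.
        String url = "jdbc:mysql://localhost:3306/dbname";
        try (Connection conn = DriverManager.getConnection(url, "username", "password")) {
            System.out.println(describe(conn.getMetaData()));
        } catch (SQLException e) {
            System.err.println("Could not connect: " + e.getMessage());
        }
    }
}
```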

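With the material above, you should have a complete picture of how to configure and upload the MySQL connection driver in a Java environment. Correct driver configuration is the foundation for a database that runs efficiently and reliably, and it is knowledge every developer working with databases should have.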