How can 1D convolutional neural networks (Conv1D) be used effectively to improve performance in time series analysis?

- 2024-08-21

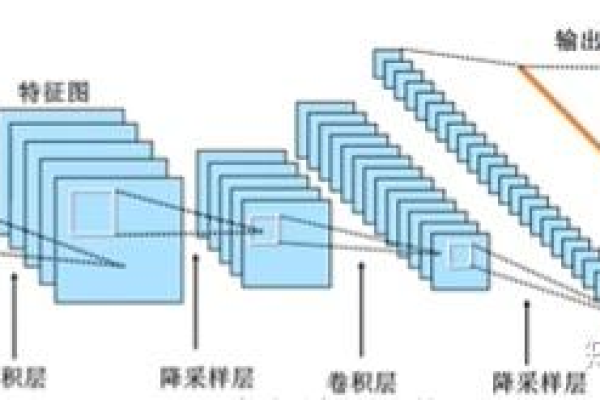

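Conv1D is a convolutional neural network layer for processing sequence data, commonly used in natural language processing and time series analysis. It extracts features by sliding filters along the single dimension of the input sequence, which helps capture local dependencies.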

Convolutional 1D (Conv1D)

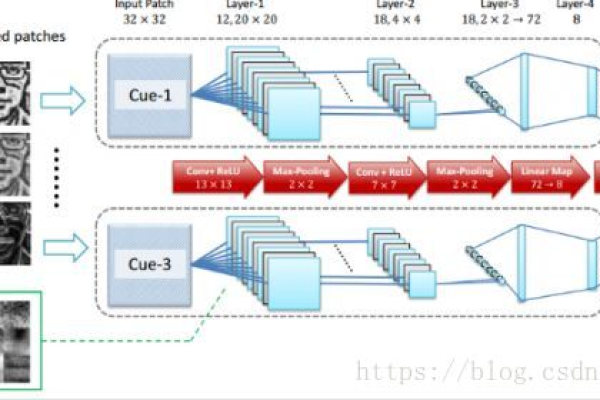

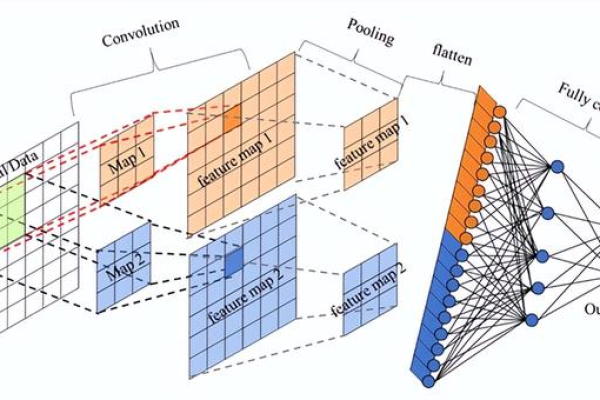

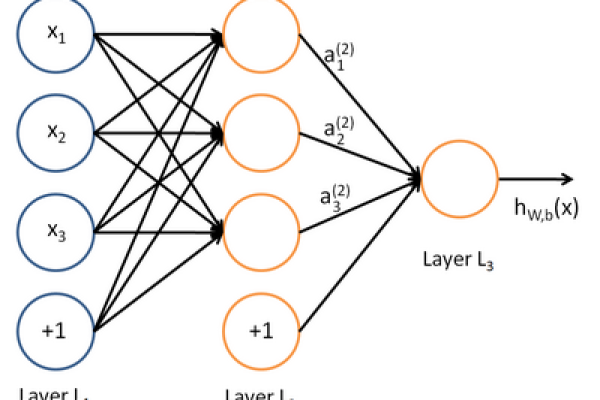

Conv1D, or one-dimensional convolutional layer, is a core component of deep learning models designed to process sequential data. The layer applies a set of learned filters, known as kernels, to the input data, transforming it into an output with a higher level of abstraction. Unlike the convolutional layers used in image processing, which operate on two-dimensional matrices, Conv1D deals specifically with one-dimensional sequence data, which makes it well suited to time series analysis, natural language processing, and signal processing.

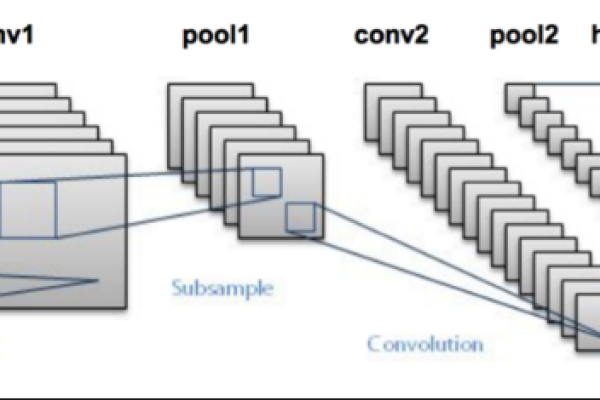

Principles of Operation

The operation of Conv1D can be dissected into several key components:

Input and Output Channels

The input and output channels define the number of features per time step in the input data and in the transformed output, respectively. For instance, if an input sequence has n features at each time step, the number of input channels is n. The number of output channels equals the number of filters applied to the input, and therefore determines the dimensionality of the output at each position.
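The relationship between channels and tensor shapes can be sketched in PyTorch as follows. The sizes (8 features, 16 filters, 50 time steps, batch of 4) are hypothetical values chosen only for illustration; the point is that `nn.Conv1d` expects the features on the channel axis, in the layout (batch, channels, length).

```python
import torch
import torch.nn as nn

# Hypothetical sizes, chosen only for illustration.
batch_size, n_features, seq_len, n_filters = 4, 8, 50, 16

# nn.Conv1d expects input shaped (batch, channels, length),
# so the n features per time step sit on the channel axis.
x = torch.randn(batch_size, n_features, seq_len)

conv = nn.Conv1d(in_channels=n_features, out_channels=n_filters, kernel_size=3)
y = conv(x)

# One filter per output channel; each filter spans all input channels.
weight_shape = tuple(conv.weight.shape)  # (out_channels, in_channels, kernel_size)
output_shape = tuple(y.shape)            # (batch, out_channels, seq_len - kernel_size + 1)
print(weight_shape, output_shape)
```

If the data is stored as (batch, length, features), as is common for time series, it would need a `transpose(1, 2)` before being passed to the layer.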

Kernel Size

The kernel size refers to the length of the filter that will be applied to the input sequence. This determines how many time steps of the input sequence will be considered at each application of the convolutional operation. A larger kernel size allows the network to capture longer temporal dependencies but also increases the model complexity.
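To make the trade-off concrete, the sketch below builds the same layer with three kernel sizes and records the parameter count and output length for each. The channel counts and sequence length are illustrative assumptions, not values prescribed by any particular task.

```python
import torch
import torch.nn as nn

# Illustrative input: 1 sequence, 2 channels, 35 time steps.
x = torch.randn(1, 2, 35)

results = {}
for k in (3, 5, 9):
    conv = nn.Conv1d(in_channels=2, out_channels=3, kernel_size=k)
    # Parameters: out_channels * in_channels * k weights plus out_channels biases.
    n_params = sum(p.numel() for p in conv.parameters())
    # Without padding, each extra kernel element shortens the output by one step.
    out_len = conv(x).shape[-1]
    results[k] = (n_params, out_len)
    print(f"kernel_size={k}: parameters={n_params}, output length={out_len}")
```

The parameter count grows linearly with the kernel size, while the unpadded output shrinks by one step for each additional kernel element.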

Stride and Padding

Stride controls the step size at which the kernel is applied across the input sequence. A stride of 1 means the kernel will be applied to every time step of the input without skipping any. Padding, on the other hand, is a technique to control the output sequence length by adding extra zeroes to the input sequence ends. This can maintain the length of the output or counteract the reduction in sequence length caused by striding.
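The effect of stride and padding on the output length can be checked numerically. The helper `conv1d_out_len` below is a hypothetical name introduced for this sketch; it implements the output-length formula given in the PyTorch `nn.Conv1d` documentation and compares it against what the layer actually produces.

```python
import torch
import torch.nn as nn

def conv1d_out_len(length, kernel_size, stride=1, padding=0, dilation=1):
    """Output length of a 1D convolution (formula from the nn.Conv1d docs)."""
    return (length + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1

x = torch.randn(1, 2, 35)  # illustrative input: 2 channels, 35 time steps

configs = [
    {"stride": 1, "padding": 0},  # baseline: output is shorter than the input
    {"stride": 2, "padding": 0},  # striding roughly halves the length
    {"stride": 1, "padding": 2},  # padding of (kernel_size - 1) / 2 keeps the length
    {"stride": 2, "padding": 2},
]

lengths = []
for cfg in configs:
    conv = nn.Conv1d(2, 3, kernel_size=5, **cfg)
    actual = conv(x).shape[-1]
    predicted = conv1d_out_len(35, 5, **cfg)
    lengths.append((actual, predicted))
    print(cfg, "-> actual:", actual, "predicted:", predicted)
```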

Dilation

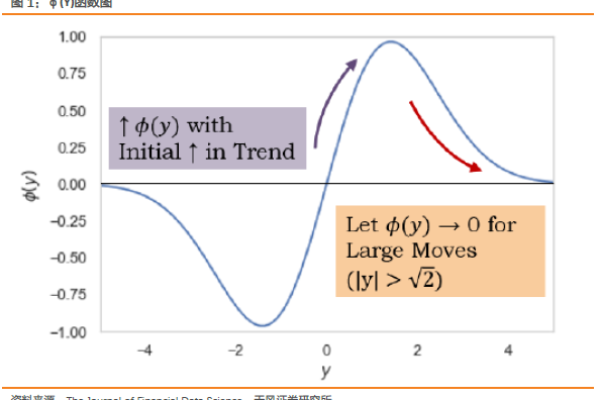

The dilation factor defines the spacing between kernel elements. It effectively stretches the kernel, giving it a larger receptive field without increasing the parameter count. This is particularly useful for capturing long-range dependencies in the data.
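A small experiment illustrates this. In the sketch below, the kernel size stays at 5 while the dilation varies; the span of input covered by one kernel application is computed as dilation * (kernel_size - 1) + 1. The input sizes are assumptions made for the example.

```python
import torch
import torch.nn as nn

x = torch.randn(1, 2, 35)  # illustrative input: 2 channels, 35 time steps
kernel_size = 5

dilation_stats = {}
for d in (1, 2, 4):
    conv = nn.Conv1d(2, 3, kernel_size=kernel_size, dilation=d)
    # Number of input time steps spanned by a single kernel application.
    span = d * (kernel_size - 1) + 1
    n_params = sum(p.numel() for p in conv.parameters())
    out_len = conv(x).shape[-1]
    dilation_stats[d] = (span, n_params, out_len)
    print(f"dilation={d}: span={span}, parameters={n_params}, output length={out_len}")
```

The parameter count is identical in all three cases; only the span, and with it the unpadded output length, changes.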

Application Scenarios

The utility of Conv1D extends to various domains:

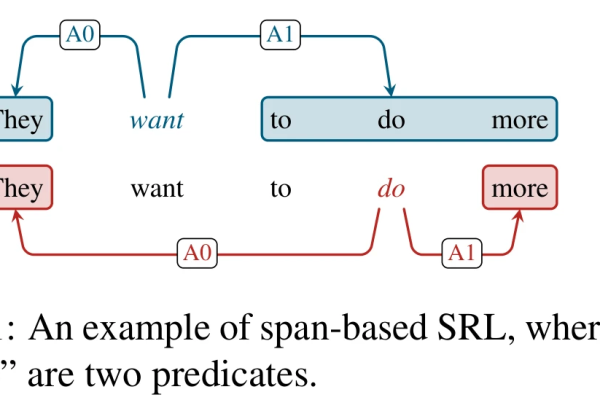

Natural Language Processing (NLP)

In NLP, Conv1D layers are used to process text data, where a sentence is treated as a sequence of words or tokens. These layers help extract n-gram features or more complex semantic patterns from text.
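As a rough sketch of the n-gram idea, a kernel of size 3 applied to word embeddings looks at three consecutive tokens at a time. The vocabulary size, embedding width, filter count, and the random token IDs below are all hypothetical; a real model would use a tokenizer and trained embeddings.

```python
import torch
import torch.nn as nn

# Hypothetical sizes; token IDs are random stand-ins for tokenized text.
vocab_size, embed_dim, n_filters = 100, 16, 8
token_ids = torch.randint(0, vocab_size, (2, 12))  # (batch, tokens)

embedding = nn.Embedding(vocab_size, embed_dim)
trigram_conv = nn.Conv1d(embed_dim, n_filters, kernel_size=3)

embedded = embedding(token_ids)      # (batch, tokens, embed_dim)
embedded = embedded.transpose(1, 2)  # (batch, embed_dim, tokens) for Conv1d

# Each output position summarizes one window of three consecutive tokens.
features = torch.relu(trigram_conv(embedded))  # (batch, n_filters, tokens - 2)

# Max over positions gives one fixed-size vector per sentence.
sentence_vector = features.max(dim=2).values   # (batch, n_filters)
print(features.shape, sentence_vector.shape)
```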

Time Series Analysis

For time series data, Conv1D can capture temporal dependencies and patterns over time, making it suitable for forecasting or classification tasks in various industries such as finance and climate science.
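A minimal classification model along these lines might look like the sketch below. The class name `TinyConv1DClassifier`, the layer widths, and the input sizes are assumptions made for illustration, not a recommended architecture.

```python
import torch
import torch.nn as nn

class TinyConv1DClassifier(nn.Module):
    """Hypothetical example: two Conv1D blocks, global pooling, linear head."""

    def __init__(self, n_features, n_classes):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv1d(n_features, 16, kernel_size=5, padding=2),
            nn.ReLU(),
            nn.Conv1d(16, 32, kernel_size=5, padding=2),
            nn.ReLU(),
            nn.AdaptiveAvgPool1d(1),  # average over time: any length -> 1 step
        )
        self.head = nn.Linear(32, n_classes)

    def forward(self, x):
        # x: (batch, n_features, time steps)
        h = self.features(x).squeeze(-1)  # (batch, 32)
        return self.head(h)               # (batch, n_classes)

model = TinyConv1DClassifier(n_features=3, n_classes=4)
logits = model(torch.randn(8, 3, 60))  # 8 series, 3 variables, 60 time steps
print(logits.shape)
```

Because the pooling layer averages over the time axis, the same model accepts series of different lengths without modification.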

Signal Processing

Conv1D is also applicable to signal processing tasks, where the input is a one-dimensional signal such as an audio waveform or a sensor reading. The layer helps with feature extraction and pattern recognition within these signals.
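One way to see the link to classical signal processing is that a 1D convolution with fixed weights is an ordinary filter. The sketch below applies a hand-written 3-point moving average to a made-up signal using `torch.nn.functional.conv1d`; a Conv1D layer learns its kernel values from data instead of having them fixed like this.

```python
import torch
import torch.nn.functional as F

# Made-up signal with two spikes, shaped (batch, channels, length).
signal = torch.tensor([0.0, 0.0, 3.0, 0.0, 0.0, 6.0, 0.0, 0.0]).view(1, 1, -1)

# Fixed 3-point moving-average kernel, shaped (out_channels, in_channels, kernel_size).
kernel = torch.full((1, 1, 3), 1.0 / 3.0)

# padding=1 adds one zero at each end so the output keeps the input length.
smoothed = F.conv1d(signal, kernel, padding=1)
print(smoothed.flatten())
```

Each spike is spread evenly over its three neighbouring positions, which is the expected behaviour of a moving average.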

Code Example

Consider a simple example using PyTorch to demonstrate how to use Conv1D:

    import torch
    import torch.nn as nn

    # Input: a batch of 1 sequence with 2 features (channels) at each of 35 time steps.
    # nn.Conv1d expects the shape (batch, channels, length).
    input_sequence = torch.randn(1, 2, 35)

    # Define a Conv1D layer with 2 input channels, 3 output channels, and a kernel size of 5
    conv1d_layer = nn.Conv1d(in_channels=2, out_channels=3, kernel_size=5)

    # Apply the layer; the output has shape (1, 3, 31), since 35 - 5 + 1 = 31
    output_sequence = conv1d_layer(input_sequence)

This code initializes a Conv1D layer and applies it to a random input sequence. The output would be a tensor with the transformed features based on the defined kernel and other parameters.

Comparison with Linear Layers

While both Conv1D and linear layers transform input data into higher-level features, they operate quite differently. A linear layer maps the whole input to the output with a separate weight for every input-output pair, whereas a Conv1D layer slides a small set of learnable filters across the input, reusing the same weights at every position. This preserves the sequence structure and lets the layer detect a pattern wherever it occurs.
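The difference shows up clearly in the parameter counts. The sketch below compares a Conv1D layer with a linear layer that maps the flattened input to an output of the same total size; the dimensions are assumptions chosen for the comparison, and `count_params` is a helper defined only for this example.

```python
import torch
import torch.nn as nn

seq_len, in_ch, out_ch, k = 35, 2, 3, 5
out_len = seq_len - k + 1  # 31 output positions without padding

conv = nn.Conv1d(in_ch, out_ch, kernel_size=k)
# A linear layer producing the same number of outputs from the flattened input.
linear = nn.Linear(in_ch * seq_len, out_ch * out_len)

def count_params(module):
    return sum(p.numel() for p in module.parameters())

conv_params = count_params(conv)      # 3 * 2 * 5 weights + 3 biases
linear_params = count_params(linear)  # 70 * 93 weights + 93 biases
print(conv_params, linear_params)

# The convolution also accepts a longer sequence unchanged; the linear layer would not.
longer_out = conv(torch.randn(1, in_ch, 100))
print(longer_out.shape)
```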

Table Summary

The following table summarizes the key attributes of a Conv1D layer:

| Attribute | Description | Example Value |
| --- | --- | --- |
| Input Channels | Number of features per time step in the input | 2 |
| Output Channels | Number of filters, i.e. features per time step in the output | 3 |
| Kernel Size | Length of the convolutional filter | 5 |
| Stride | Step size for applying the filter | 1 |
| Padding | Extra zeroes added to each end of the input | 0 |
| Dilation | Spacing between kernel elements | 1 |

By understanding these attributes, one can tailor the Conv1D layer to fit specific requirements of a given task.
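For reference, the attributes map directly onto the keyword arguments of PyTorch's `nn.Conv1d`. The sketch below passes every example value from the table explicitly, although stride, padding, and dilation would default to the same values if omitted; the input length of 35 is an assumption.

```python
import torch
import torch.nn as nn

conv = nn.Conv1d(
    in_channels=2,   # Input Channels
    out_channels=3,  # Output Channels
    kernel_size=5,   # Kernel Size
    stride=1,        # Stride
    padding=0,       # Padding
    dilation=1,      # Dilation
)

# PyTorch stores the per-dimension settings as 1-element tuples.
settings = (conv.kernel_size, conv.stride, conv.padding, conv.dilation)
print(settings)

y = conv(torch.randn(1, 2, 35))  # (batch, channels, length)
print(y.shape)
```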

Conclusion

Conv1D layers provide a powerful tool for processing and analyzing sequential data in various domains. Their ability to capture local temporal patterns and dependencies makes them a widely used building block in modern deep learning architectures. By carefully choosing the layer's parameters and integrating it within a larger network, practitioners can use it to achieve strong performance on a wide range of problems.

FAQs

Q1: How does the kernel size affect the output of a Conv1D layer?

A1: The kernel size determines how many time steps of the input sequence are considered at once when applying the convolution operation. A larger kernel size allows the model to capture longer temporal dependencies within the sequence, which can be beneficial for understanding more complex patterns. However, it also increases the number of parameters the layer needs to learn, potentially making the model more computationally expensive and prone to overfitting without sufficient data.

Q2: Can padding be used to control the output sequence length?

A2: Yes, padding is a technique used to control the length of the output sequence by adding extra zeroes to the beginning and end of the input sequence. This can counteract the effect of strides that reduce the length of the output or can be used to intentionally maintain the same length as the input, depending on the specific needs of the model architecture and the task at hand.
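As a brief illustration of the length-preserving case, recent PyTorch versions accept the string `padding="same"` for convolutions with a stride of 1. The sketch assumes such a version is installed; on older versions, an explicit `padding=2` would give the same result for a kernel size of 5.

```python
import torch
import torch.nn as nn

x = torch.randn(1, 2, 35)  # illustrative input: 2 channels, 35 time steps

no_pad = nn.Conv1d(2, 3, kernel_size=5)                    # default padding=0
same_pad = nn.Conv1d(2, 3, kernel_size=5, padding="same")  # assumes string padding is supported

len_no_pad = no_pad(x).shape[-1]  # shorter than the input
len_same = same_pad(x).shape[-1]  # equal to the input length
print(len_no_pad, len_same)
```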

Article link: http://www.xixizhuji.com/fuzhu/136559.html